Mosseri claims digital camera companies are on the wrong path.

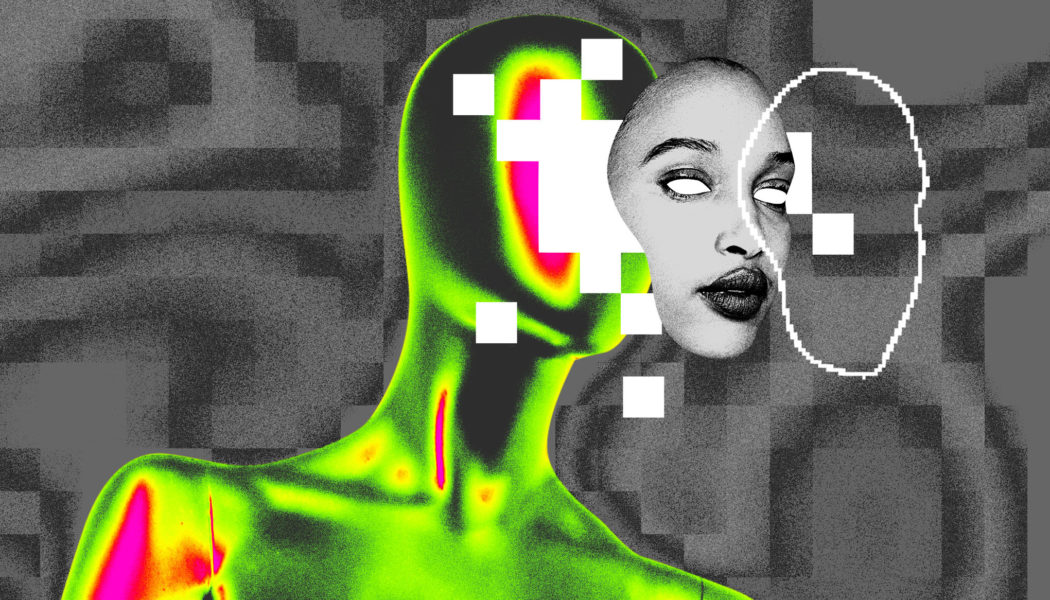

Instagram boss Adam Mosseri is closing out 2025 with a 20-images-deep dive into what a new era of “infinite synthetic content” means as it all becomes harder and harder to distinguish from reality, and the old, more personal Instagram feed that he says has been “dead” for years. Last year, The Verge’s Sarah Jeong wrote that “…the default assumption about a photo is about to become that it’s faked, because creating realistic and believable fake photos is now trivial to do,” and Mosseri eventually concurs:

For most of my life I could safely assume photographs or videos were largely accurate captures of moments that happened. This is clearly no longer the case and it’s going to take us years to adapt.

We’re going to move from assuming what we see is real by default, to starting with skepticism. Paying attention to who is sharing something and why. This will be uncomfortable – we’re genetically predisposed to believing our eyes.

You can read the full text from his slideshow at the bottom of this post, but according to Mosseri, the evolution required of Instagram and other platforms is that “We need to build the best creative tools. Label AI-generated content and verify authentic content. Surface credibility signals about who’s posting. Continue to improve ranking for originality.”

Our readers and listeners know we’ve spent the last few years discussing the “what is a photo?” apocalypse arriving in the form of AI image editing and generation. Now, as we hurtle into 2026, it feels a little late to lay out a thin list of proposals.

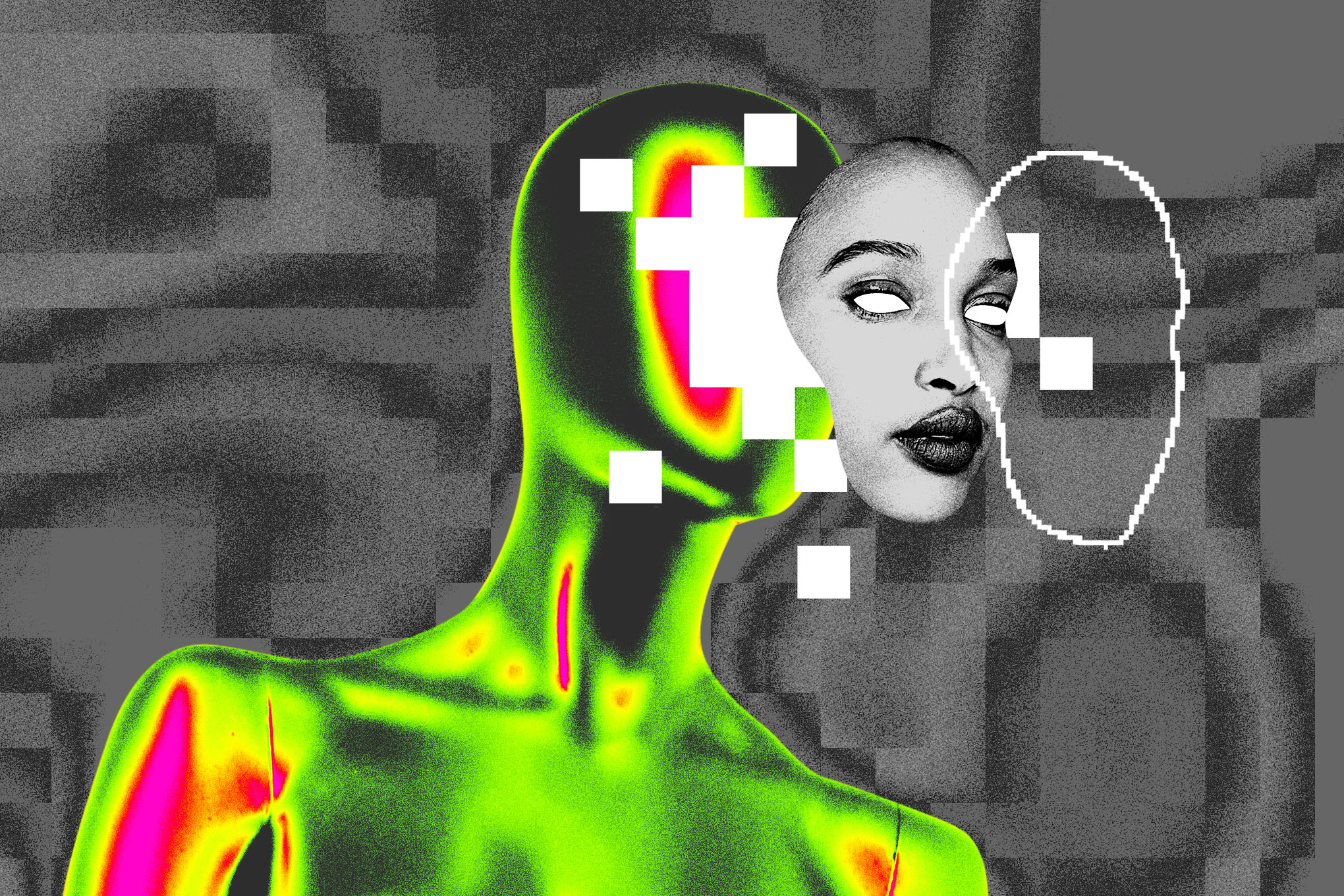

Mosseri’s Instagram-centered view of the whole thing claims that “We like to complain about ‘AI slop,’ but there’s a lot of amazing AI content,” without specifically identifying any of it, or specifically mentioning Meta’s push for AI tools. He claims camera companies are going the wrong way by trying to give everyone the ability to “look like a pro photographer from 2015.”

Instead, he says raw, unflattering images are, temporarily, a signal of reality, until AI is able to copy imperfections too. Then “we’ll need to shift our focus to who says something instead of what is being said,” with fingerprints and cryptographic signing of images from the cameras that took them to ID real media instead of relying on tags and watermarks added to AI.

Mosseri is far from the first tech exec to point toward the same issue. Samsung exec Patrick Chomet took the approach that “actually, there is no such thing as a real picture,” after controversies last year over the Galaxy phones’ approach to Moon photography, and Apple’s Craig Federighi told the WSJ he’s “concerned” about the impact of AI editing. But hey, maybe we’re just another Instagram slideshow or two away from figuring all of this out.

Adam Mosseri:

The key risk Instagram faces is that, as the world changes more quickly, the platform fails to keep up. Looking forward to 2026, one major shift: authenticity is becoming infinitely reproducible.

Everything that made creators matter – the ability to be real, to connect, to have a voice that couldn’t be faked – is now accessible to anyone with the right tools. Deepfakes are getting better. AI generates photos and videos indistinguishable from captured media.

Power has shifted from institutions to individuals because the internet made it so anyone with a compelling idea could find an audience. The cost of distributing information is zero.

Individuals, not publishers or brands, established that there’s a significant market for content from people. Trust in institutions is at an all-time low. We’ve turned to self-captured content from creators we trust and admire.

We like to complain about “AI slop,” but there’s a lot of amazing AI content. Even the quality AI content has a look though: too slick, skin too smooth. That will change – we’re going to see more realistic AI content.

Authenticity is becoming a scarce resource, driving more demand for creator content, not less. The bar is shifting from “can you create?” to “can you make something that only you could create?”

Unless you are under 25, you probably think of Instagram as a feed of square photos: polished makeup, skin smoothing, and beautiful landscapes. That feed is dead. People stopped sharing personal moments to feed years ago.

The primary way people share now is in DMs: blurry photos and shaky videos of daily experiences. Shoe shots and unflattering candids.

This raw aesthetic has bled into public content and across artforms.

The camera companies are betting on the wrong aesthetic. They’re competing to make everyone look like a pro photographer from 2015. But in a world where AI can generate flawless imagery, the professional look becomes the tell.

Flattering imagery is cheap to produce and boring to consume.

People want content that feels real. Savvy creators are leaning into unproduced, unflattering images. In a world where everything can be perfected, imperfection becomes a signal.

Rawness isn’t just aesthetic preference anymore — it’s proof. It’s defensive. A way of saying: this is real because it’s imperfect.

Relatively quickly, AI will create any aesthetic you like, including an imperfect one that presents as authentic. At that point we’ll need to shift our focus to who says something instead of what is being said.

For most of my life I could safely assume photographs or videos were largely accurate captures of moments that happened. This is clearly no longer the case and it’s going to take us years to adapt.

We’re going to move from assuming what we see is real by default, to starting with skepticism. Paying attention to who is sharing something and why. This will be uncomfortable – we’re genetically predisposed to believing our eyes.

Platforms like Instagram will do good work identifying AI content, but they’ll get worse at it over time as AI gets better. It will be more practical to fingerprint real media than fake media.

Camera manufacturers will cryptographically sign images at capture, creating a chain of custody.

Labeling is only part of the solution. We need to surface much more context about the accounts sharing content so people can make informed decisions. Who is behind the account?

In a world of infinite abundance and infinite doubt, the creators who can maintain trust and signal authenticity – by being real, transparent, and consistent – will stand out.

We need to build the best creative tools. Label AI-generated content and verify authentic content. Surface credibility signals about who’s posting. Continue to improve ranking for originality.

Instagram is going to have to evolve in a number of ways, and fast.